Kejia Ren

Department of Computer Science

Rice University, Houston, TX, USA

Contact: kr43 [at] rice.edu

I am a final-year Ph.D. student in Computer Science at Rice University, advised by Prof. Kaiyu Hang in the Robotics and Physical Interactions (RobotΠ) Lab. My research interests lie in planning and control of contact-rich, physics-intensive robotic manipulation, leveraging informed search, constrained optimization, and learning.

During my Ph.D., I have worked closely with Prof. Lydia E. Kavraki at Rice University and Dr. Andrew S. Morgan at the Robotics and AI (RAI) Institute (formerly Boston Dynamics AI Institute), where I was a research intern in Spring 2025. Prior to Rice, I received my B.E. degree in Vehicle Engineering from Tongji University and M.S.E. degree in Robotics from Johns Hopkins University.

* indicates equal contribution.

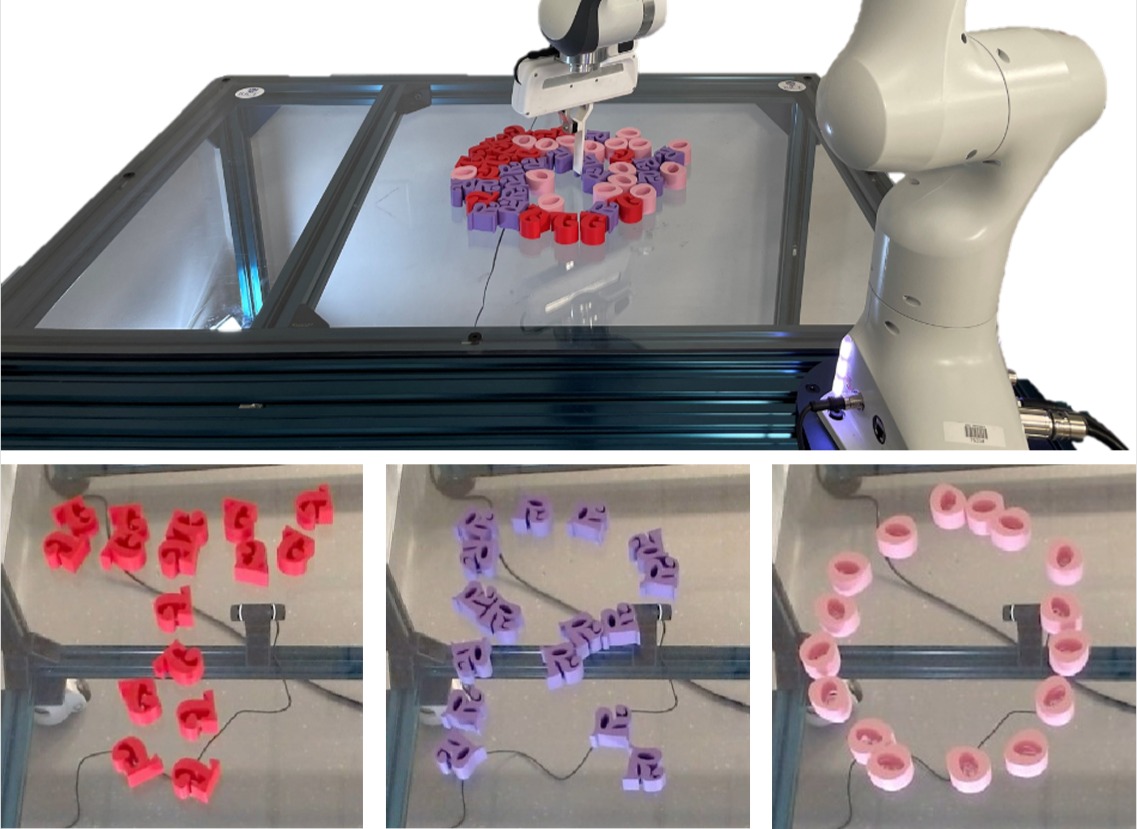

Object-Centric Kinodynamic Planning for Nonprehensile Robot Rearrangement Manipulation

Nonprehensile actions such as pushing are crucial for addressing multi-object rearrangement problems. Many traditional methods generate robot-centric actions, which differ from intuitive human strategies and are typically inefficient. To this end, we adopt an object-centric planning paradigm and propose a unified framework for addressing a range of large-scale, physics-intensive nonprehensile rearrangement problems challenged by modeling inaccuracies and real-world uncertainties. By assuming each object can actively move without being driven by robot interactions, our planner first computes desired object motions, which are then realized through robot actions generated online via a closed-loop pushing strategy. Through extensive experiments and in comparison with state-of-the-art baselines in both simulation and on a physical robot, we show that our object-centric planning framework can generate more intuitive and task-effective robot actions with significantly improved efficiency. In addition, we propose a benchmarking protocol to standardize and facilitate future research in nonprehensile rearrangement.

@article{ren2024object,

title={Object-Centric Kinodynamic Planning for Nonprehensile Robot Rearrangement Manipulation},

author={Ren, Kejia and Wang, Gaotian and Morgan, Andrew S and Kavraki, Lydia E and Hang, Kaiyu},

journal={arXiv preprint arXiv:2410.00261},

year={2024}

}

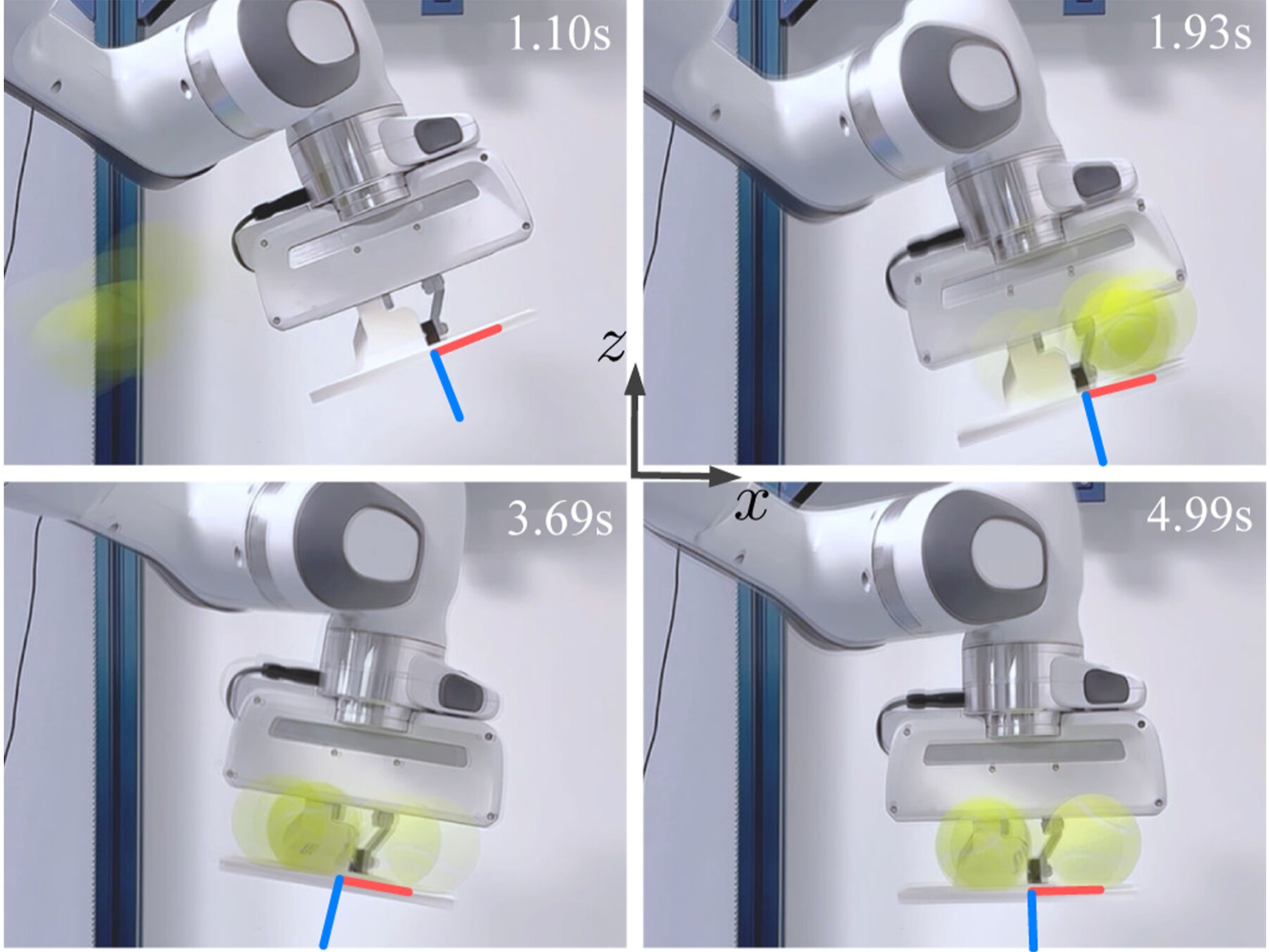

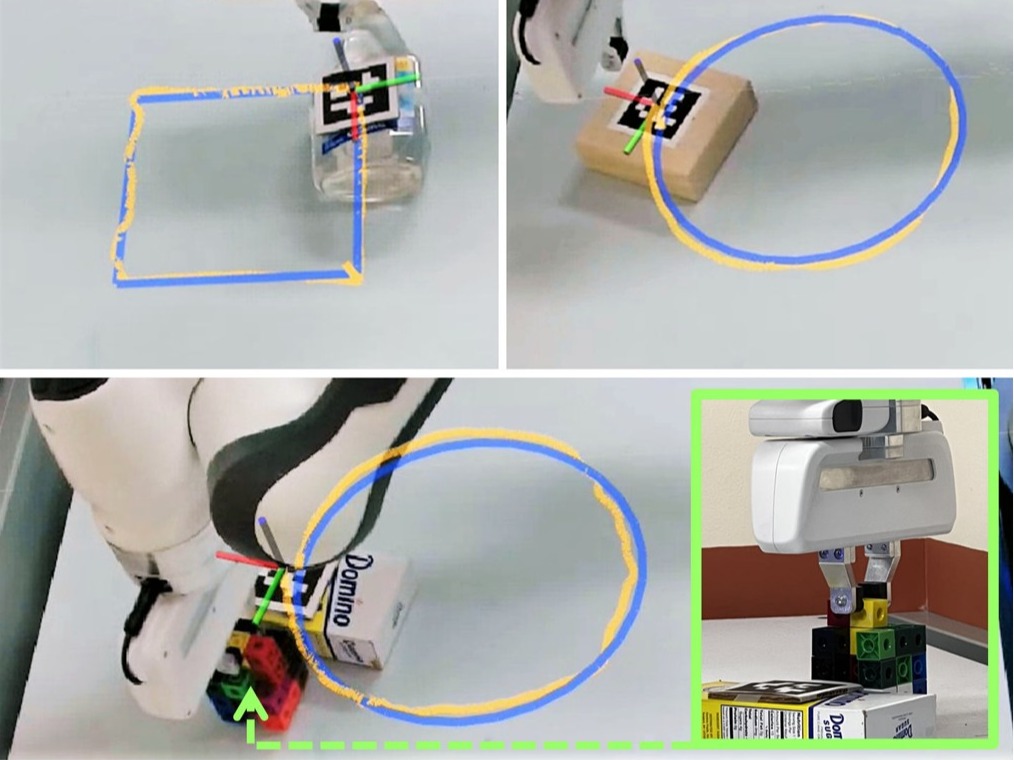

Collision-Inclusive Manipulation Planning for Occluded Object Grasping via Compliant Robot Motions

Robotic manipulation research has investigated contact-rich problems and strategies that require robots to intentionally collide with their environment, to accomplish tasks that cannot be handled by traditional collision-free solutions. By enabling compliant robot motions, collisions between the robot and its environment become more tolerable and can thus be exploited, but more physical uncertainties are introduced. To address contact-rich problems such as occluded object grasping while handling the involved uncertainties, we propose a collision-inclusive planning framework that can transition the robot to a desired task configuration via roughly modeled collisions absorbed by Cartesian impedance control. By strategically exploiting the environmental constraints and exploring inside a manipulation funnel formed by task repetitions, our framework can effectively reduce physical and perception uncertainties. With real-world evaluations on both single-arm and dual-arm setups, we show that our framework is able to efficiently address various realistic occluded grasping problems where a feasible grasp does not initially exist.

@article{ren2025collision,

title={Collision-Inclusive Manipulation Planning for Occluded Object Grasping via Compliant Robot Motions},

author={Ren, Kejia and Wang, Gaotian and Morgan, Andrew S. and Hang, Kaiyu},

journal={IEEE Robotics and Automation Letters},

year={2025},

volume={10},

number={9},

pages={9120-9127},

doi={10.1109/LRA.2025.3592060}

}

Caging in Time: A Framework for Robust Object Manipulation under Uncertainties and Limited Robot Perception

Real-world object manipulation has been commonly challenged by physical uncertainties and perception limitations. While caging configuration-based manipulation frameworks are an effective strategy and have successfully provided robust solutions, they are not broadly applicable due to their strict requirements on the availability of multiple robots, widely distributed contacts, or specific geometries of robots or objects. Building upon previous sensorless manipulation ideas and uncertainty handling approaches, this work proposes a novel framework termed Caging in Time to allow caging configurations to be formed even with one robot engaged in a task. This concept leverages the insight that while caging requires constraining the object's motion, only part of the cage actively contacts the object at any moment. As such, by strategically switching the end-effector configuration and collapsing it in time, we form a cage with its necessary portion active whenever needed. We instantiate our approach on challenging quasi-static and dynamic manipulation tasks, showing that Caging in Time can be achieved in general cage formulations including geometry-based and energy-based cages. With extensive experiments, we show robust and accurate manipulation, in an open-loop manner, without requiring detailed knowledge of the object geometry or physical properties, or real-time accurate feedback on the manipulation states. In addition to being an effective and robust open-loop manipulation solution, Caging in Time can be a supplementary strategy to other manipulation systems affected by uncertain or limited robot perception.

@article{wang2025caging,

title={Caging in time: A framework for robust object manipulation under uncertainties and limited robot perception},

author={Wang, Gaotian and Ren, Kejia and Morgan, Andrew S and Hang, Kaiyu},

journal={The International Journal of Robotics Research},

pages={02783649251343926},

year={2025},

publisher={SAGE Publications Sage UK: London, England}

}

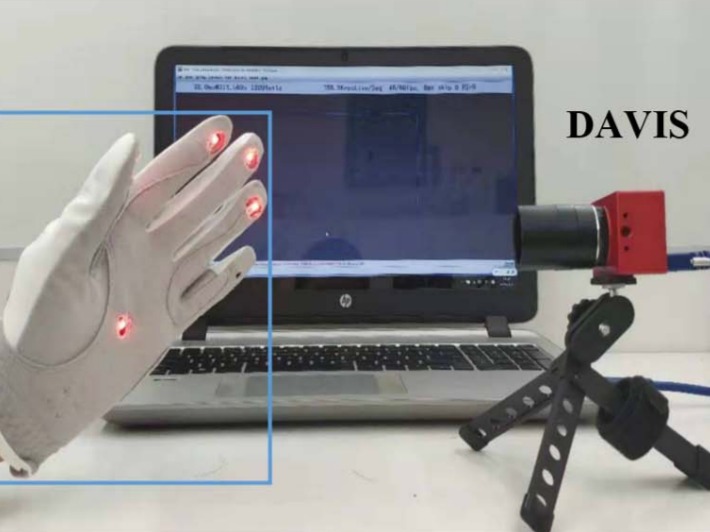

A Novel Illumination-Robust Hand Gesture Recognition System With Event-Based Neuromorphic Vision Sensor

The hand gesture recognition system is a noncontact and intuitive communication approach, which, in turn, allows for natural and efficient interaction. This work focuses on developing a novel and robust gesture recognition system, which is insensitive to environmental illumination and background variation. In the field of gesture recognition, standard vision sensors, such as CMOS cameras, are widely used as the sensing devices in state-of-the-art hand gesture recognition systems. However, such cameras depend on environmental constraints, such as lighting variability and the cluttered background, which significantly deteriorate their performance. In this work, we propose an event-based gesture recognition system to overcome the detrimental constraints and enhance the robustness of the recognition performance. Our system relies on a biologically inspired neuromorphic vision sensor that has microsecond temporal resolution, high dynamic range, and low latency. The sensor output is a sequence of asynchronous events instead of discrete frames. To interpret the visual data, we utilize a wearable glove as an interaction device with five high-frequency (>100 Hz) active LED markers (ALMs), representing fingers and palm, which are tracked precisely in the temporal domain using a restricted spatiotemporal particle filter algorithm. The latency of the sensing pipeline is negligible compared with the dynamics of the environment as the sensor's temporal resolution allows us to distinguish high frequencies precisely. We design an encoding process to extract features and adopt a lightweight network to classify the hand gestures. The recognition accuracy of our system is comparable to the state-of-the-art methods. To study the robustness of the system, experiments considering illumination and background variations are performed, and the results show that our system is more robust than the state-of-the-art deep learning-based gesture recognition systems.

@article{chen2021novel,

title={A novel illumination-robust hand gesture recognition system with event-based neuromorphic vision sensor},

author={Chen, Guang and Xu, Zhongcong and Li, Zhijun and Tang, Huajin and Qu, Sanqing and Ren, Kejia and Knoll, Alois},

journal={IEEE Transactions on automation science and engineering},

volume={18},

number={2},

pages={508--520},

year={2021},

publisher={IEEE}

}

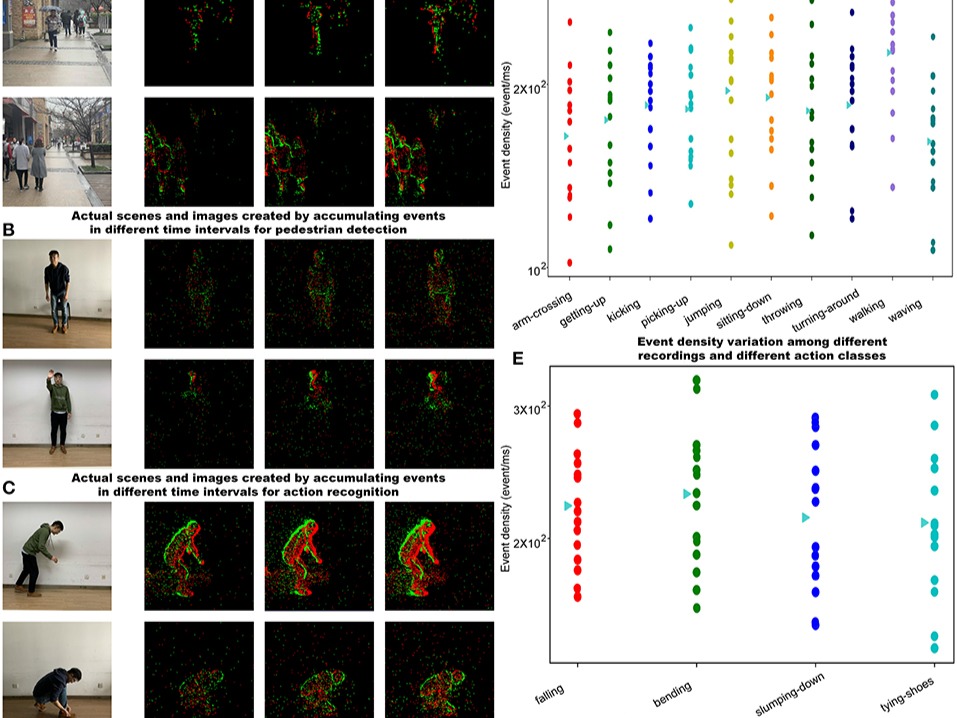

Neuromorphic Vision Datasets for Pedestrian Detection, Action Recognition, and Fall Detection

@article{miao2019neuromorphic,

title={Neuromorphic vision datasets for pedestrian detection, action recognition, and fall detection},

author={Miao, Shu and Chen, Guang and Ning, Xiangyu and Zi, Yang and Ren, Kejia and Bing, Zhenshan and Knoll, Alois},

journal={Frontiers in neurorobotics},

volume={13},

pages={38},

year={2019},

publisher={Frontiers Media SA}

}

B4P: Simultaneous Grasp and Motion Planning for Object Placement via Parallelized Bidirectional Forests and Path Repair

Robot pick and place systems have traditionally decoupled grasp, placement, and motion planning to build sequential optimization pipelines with an assumption that the individual components will be able to work together. However, this separation introduces sub-optimality, as grasp choices may limit, or even prohibit, feasible motions for a robot to reach the target placement pose, particularly in cluttered environments with narrow passages. To this end, we propose a forest-based planning framework to simultaneously find grasp configurations and feasible robot motions that explicitly satisfy downstream placement configurations paired with the selected grasps. Our proposed framework leverages a bidirectional sampling-based approach to build a start forest, rooted at the feasible grasp regions, and a goal forest, rooted at the feasible placement regions, to facilitate the search through randomly explored motions that connect valid pairs of grasp and placement trees. We demonstrate that the framework's inherent parallelism enables superlinear speedup, making it scalable for applications for redundant robot arms, e.g., 7 DoF, to work efficiently in highly cluttered environments. Extensive experiments in simulation demonstrate the robustness and efficiency of the proposed framework in comparison with multiple baselines under diverse scenarios.

@article{leebron2025b4p,

title={B4P: Simultaneous Grasp and Motion Planning for Object Placement via Parallelized Bidirectional Forests and Path Repair},

author={Leebron, Benjamin H and Ren, Kejia and Chen, Yiting and Hang, Kaiyu},

journal={arXiv preprint arXiv:2504.04598},

year={2025}

}

UNO Push: Unified Nonprehensile Object Pushing via Non-Parametric Estimation and Model Predictive Control

Nonprehensile manipulation through precise pushing is an essential skill that has been commonly challenged by perception and physical uncertainties, such as those associated with contacts, object geometries, and physical properties. For this, we propose a unified framework that jointly addresses system modeling, action generation, and control. While most existing approaches either heavily rely on a priori system information for analytic modeling, or leverage a large dataset to learn dynamic models, our framework approximates a system transition function via non-parametric learning only using a small number of exploratory actions (ca. 10). The approximated function is then integrated with model predictive control to provide precise pushing manipulation. Furthermore, we show that the approximated system transition functions can be robustly transferred across novel objects while being online updated to continuously improve the manipulation accuracy. Through extensive experiments on a real robot platform with a set of novel objects and comparing against a state-of-the-art baseline, we show that the proposed unified framework is a light-weight and highly effective approach to enable precise pushing manipulation all by itself. Our evaluation results illustrate that the system can robustly ensure millimeter-level precision and can straightforwardly work on any novel object.

@inproceedings{wang2024uno,

title={UNO Push: Unified Nonprehensile Object Pushing via Non-Parametric Estimation and Model Predictive Control},

author={Wang, Gaotian and Ren, Kejia and Hang, Kaiyu},

booktitle={2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={9893--9900},

year={2024},

organization={IEEE}

}

Interactive Robot-Environment Self-Calibration via Compliant Exploratory Actions

Calibrating robots into their workspaces is crucial for manipulation tasks. Existing calibration techniques often rely on sensors external to the robot (cameras, laser scanners, etc.) or specialized tools. This reliance complicates the calibration process and increases the costs and time requirements. Furthermore, the associated setup and measurement procedures require significant human intervention, which makes them more challenging to operate. Using the built-in force-torque sensors, which are nowadays a default component in collaborative robots, this work proposes a self-calibration framework where robot-environmental spatial relations are automatically estimated through compliant exploratory actions by the robot itself. The self-calibration approach converges, verifies its own accuracy, and terminates upon completion, autonomously purely through interactive exploration of the environment's geometries. Extensive experiments validate the effectiveness of our self-calibration approach in accurately establishing the robot-environment spatial relationships without the need for additional sensing equipment or any human intervention.

@inproceedings{chanrungmaneekul2024interactive,

title={Interactive Robot-Environment Self-Calibration via Compliant Exploratory Actions},

author={Chanrungmaneekul, Podshara and Ren, Kejia and Grace, Joshua T and Dollar, Aaron M and Hang, Kaiyu},

booktitle={2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={8307--8314},

year={2024},

organization={IEEE}

}

RISeg: Robot Interactive Object Segmentation via Body Frame-Invariant Features

In order to successfully perform manipulation tasks in new environments, such as grasping, robots must be proficient in segmenting unseen objects from the background and/or other objects. Previous works perform unseen object instance segmentation (UOIS) by training deep neural networks on large-scale data to learn RGB/RGB-D feature embeddings, where cluttered environments often result in inaccurate segmentations. We build upon these methods and introduce a novel approach to correct inaccurate segmentation, such as undersegmentation, of static image-based UOIS masks by using robot interaction and a designed body frame-invariant feature. We demonstrate that the relative linear and rotational velocities of frames randomly attached to rigid bodies due to robot interactions can be used to identify objects and accumulate corrected object-level segmentation masks. By introducing motion to regions of segmentation uncertainty, we are able to drastically improve segmentation accuracy in an uncertainty-driven manner with minimal, non-disruptive interactions (ca. 2-3 per scene). We demonstrate the effectiveness of our proposed interactive perception pipeline in accurately segmenting cluttered scenes by achieving an average object segmentation accuracy rate of 80.7%, an increase of 28.2% when compared with other state-of-the-art UOIS methods.

@inproceedings{qian2024riseg,

title={RISeg: Robot interactive object segmentation via body frame-invariant features},

author={Qian, Howard H and Lu, Yangxiao and Ren, Kejia and Wang, Gaotian and Khargonkar, Ninad and Xiang, Yu and Hang, Kaiyu},

booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},

pages={13954--13960},

year={2024},

organization={IEEE}

}

Non-Parametric Self-Identification and Model Predictive Control of Dexterous In-Hand Manipulation

Building hand-object models for dexterous in-hand manipulation remains a crucial and open problem. Major challenges include the difficulty of obtaining the geometric and dynamical models of the hand, object, and time-varying contacts, as well as the inevitable physical and perception uncertainties. Instead of building accurate models to map between the actuation inputs and the object motions, this work proposes to enable the hand-object systems to continuously approximate their local models via a self-identification process where an underlying manipulation model is estimated through a small number of exploratory actions and non-parametric learning. With a very small number of data points, as opposed to most data-driven methods, our system self-identifies the underlying manipulation models online through exploratory actions and non-parametric learning. By integrating the self-identified hand-object model into a model predictive control framework, the proposed system closes the control loop to provide high-accuracy in-hand manipulation. Furthermore, the proposed self-identification is able to adaptively trigger online updates through additional exploratory actions, as soon as the self-identified local models render large discrepancies against the observed manipulation outcomes. We implemented the proposed approach on a sensorless underactuated Yale Model O hand with a single external camera to observe the object's motion. With extensive experiments, we show that the proposed self-identification approach can enable accurate and robust dexterous manipulation without requiring an accurate system model or a large amount of data for offline training.

@inproceedings{chanrungmaneekul2023non,

title={Non-parametric self-identification and model predictive control of dexterous in-hand manipulation},

author={Chanrungmaneekul, Podshara and Ren, Kejia and Grace, Joshua T and Dollar, Aaron M and Hang, Kaiyu},

booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={8743--8750},

year={2023},

organization={IEEE}

}

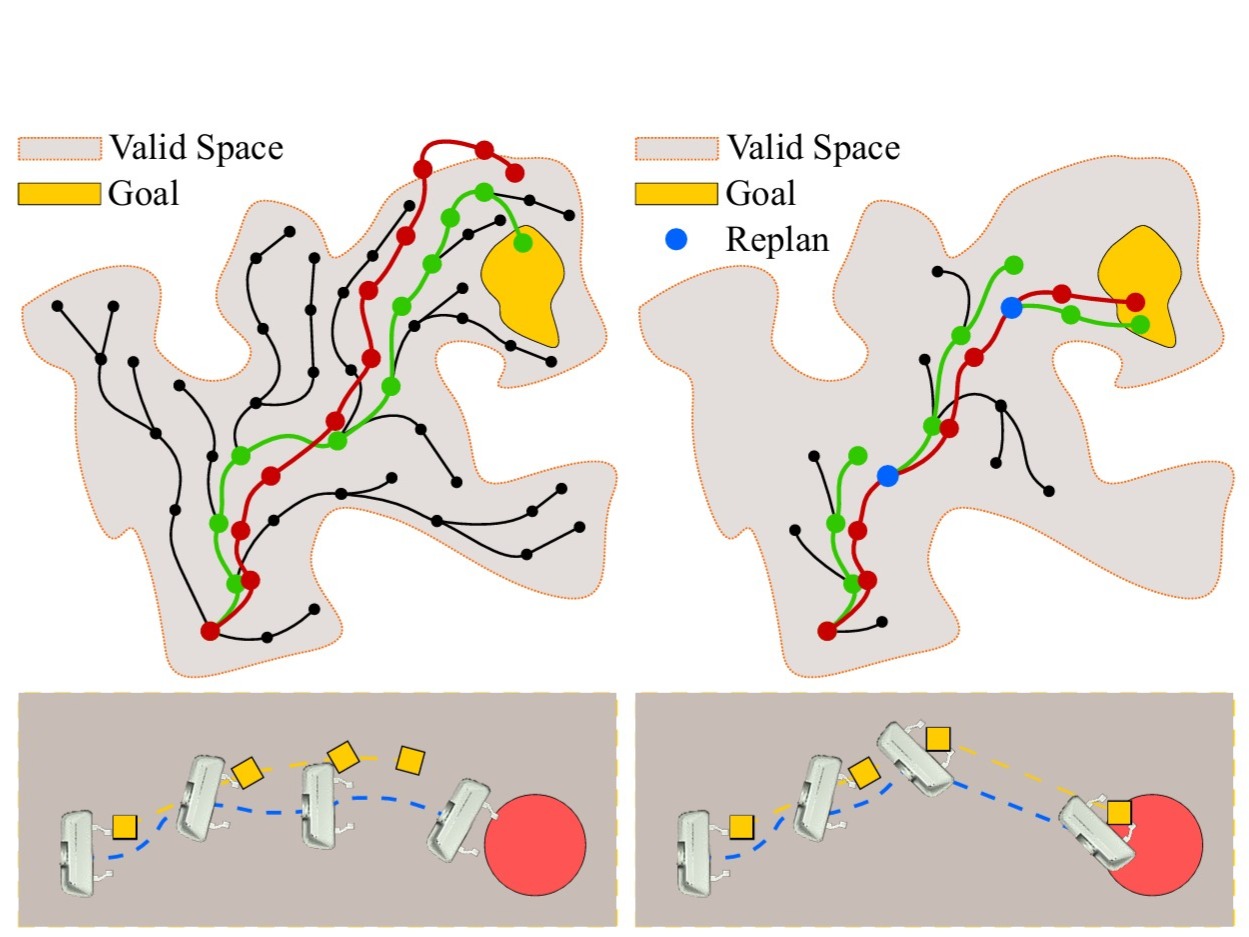

Kinodynamic Rapidly-exploring Random Forest for Rearrangement-Based Nonprehensile Manipulation

Rearrangement-based nonprehensile manipulation remains a challenging problem due to the high-dimensional problem space and the complex physical uncertainties it entails. We formulate this class of problems as a coupled problem of local rearrangement and global action optimization by incorporating free-space transit motions between constrained rearranging actions. We propose a forest-based kinodynamic planning framework to concurrently search in multiple problem regions, so as to enable global exploration of the most task-relevant subspaces, while facilitating effective switches between local rearranging actions. By interleaving dynamic horizon planning and action execution, our framework can adaptively handle real-world uncertainties. With extensive experiments, we show that our framework significantly improves the planning efficiency and manipulation effectiveness while being robust against various uncertainties.

@inproceedings{ren2023kinodynamic,

title={Kinodynamic rapidly-exploring random forest for rearrangement-based nonprehensile manipulation},

author={Ren, Kejia and Chanrungmaneekul, Podshara and Kavraki, Lydia E and Hang, Kaiyu},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

pages={8127--8133},

year={2023},

organization={IEEE}

}

Rearrangement-Based Manipulation via Kinodynamic Planning and Dynamic Planning Horizons

Robot manipulation in cluttered environments often requires complex and sequential rearrangement of multiple objects in order to achieve the desired reconfiguration of the target objects. Due to the sophisticated physical interactions involved in such scenarios, rearrangement-based manipulation is still limited to a small range of tasks and is especially vulnerable to physical uncertainties and perception noise. This paper presents a planning framework that leverages the efficiency of sampling-based planning approaches, and closes the manipulation loop by dynamically controlling the planning horizon. Our approach interleaves planning and execution to progressively approach the manipulation goal while correcting any errors or path deviations along the process. Meanwhile, our framework allows the definition of manipulation goals without requiring explicit goal configurations, enabling the robot to flexibly interact with all objects to facilitate the manipulation of the target ones. With extensive experiments both in simulation and on a real robot, we evaluate our framework on three manipulation tasks in cluttered environments: grasping, relocating, and sorting. In comparison with two baseline approaches, we show that our framework can significantly improve planning efficiency, robustness against physical uncertainties, and task success rate under limited time budgets.

@inproceedings{ren2022rearrangement,

title={Rearrangement-based manipulation via kinodynamic planning and dynamic planning horizons},

author={Ren, Kejia and Kavraki, Lydia E and Hang, Kaiyu},

booktitle={2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={1145--1152},

year={2022},

organization={IEEE}

}

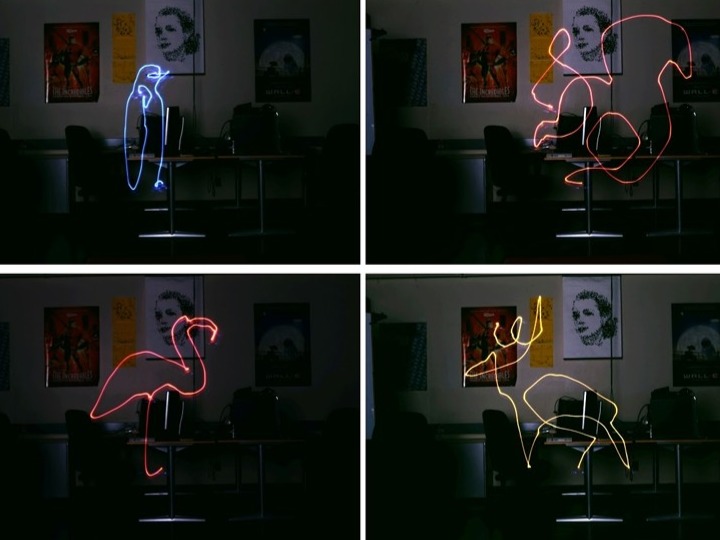

Single Stroke Aerial Robot Light Painting

This paper investigates trajectory generation alternatives for creating single-stroke light paintings with a small quadrotor robot. We propose to reduce the cost of a minimum snap piecewise polynomial quadrotor trajectory passing through a set of waypoints by displacing those waypoints towards or away from the camera while preserving their projected position. It is in regions of high curvature, where waypoints are close together, that we make modifications to reduce snap, and we evaluate two different strategies: one that uses a full range of depths to increase the distance between close waypoints, and another that tries to keep the final set of waypoints as close to the original plane as possible. Using a variety of one-stroke animal illustrations as targets, we evaluate and compare the cost of different optimized trajectories, and discuss the qualitative and quantitative quality of flights captured in long exposure photographs.

@inproceedings{ren2019single,

title={Single stroke aerial robot light painting},

author={Ren, Kejia and Kry, Paul G},

booktitle={Proceedings of the 8th ACM/Eurographics Expressive Symposium},

pages={61--67},

year={2019}

}
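
The waypoint displacement idea in the light painting abstract above (moving waypoints towards or away from the camera while preserving their projected position) can be illustrated with a minimal sketch. It assumes an ideal pinhole camera at the origin looking along +z, which the abstract does not specify; the names `project`, `displace_along_ray`, and `distance` are hypothetical and are not taken from the paper's implementation. The snap optimization itself is not shown.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project(point: Vec3, focal: float = 1.0) -> Tuple[float, float]:
    """Pinhole projection for an assumed camera at the origin looking along +z."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal * x / z, focal * y / z)


def displace_along_ray(point: Vec3, new_depth: float) -> Vec3:
    """Slide a waypoint along its camera ray to a new depth.

    Scaling all three coordinates by new_depth / z keeps x/z and y/z fixed,
    so the projected image position is unchanged.
    """
    x, y, z = point
    if z <= 0 or new_depth <= 0:
        raise ValueError("depths must be positive")
    scale = new_depth / z
    return (x * scale, y * scale, new_depth)


def distance(a: Vec3, b: Vec3) -> float:
    """Euclidean distance between two 3D waypoints."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


if __name__ == "__main__":
    # Two waypoints that are close together on the original drawing plane z = 2.
    a = (0.0, 0.0, 2.0)
    b = (0.1, 0.0, 2.0)
    # Push only b away from the camera; its image position stays the same.
    b_far = displace_along_ray(b, 3.0)
    print("projection before:", project(b), "after:", project(b_far))
    print("spacing before:", distance(a, b), "after:", distance(a, b_far))
```

In this sketch, pushing one of two nearby waypoints to a different depth increases their 3D separation without changing the drawing seen by the camera, which is the degree of freedom the abstract describes exploiting in high-curvature regions.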